A Snapshot of Software Decline

Software has steadily been getting worse. Here’s what it looks like and why.

This was originally penned on the 5th day of March, 2021. It was a Friday. It has been reprinted here for posterity and/or austerity (whichever you prefer). Enjoy.

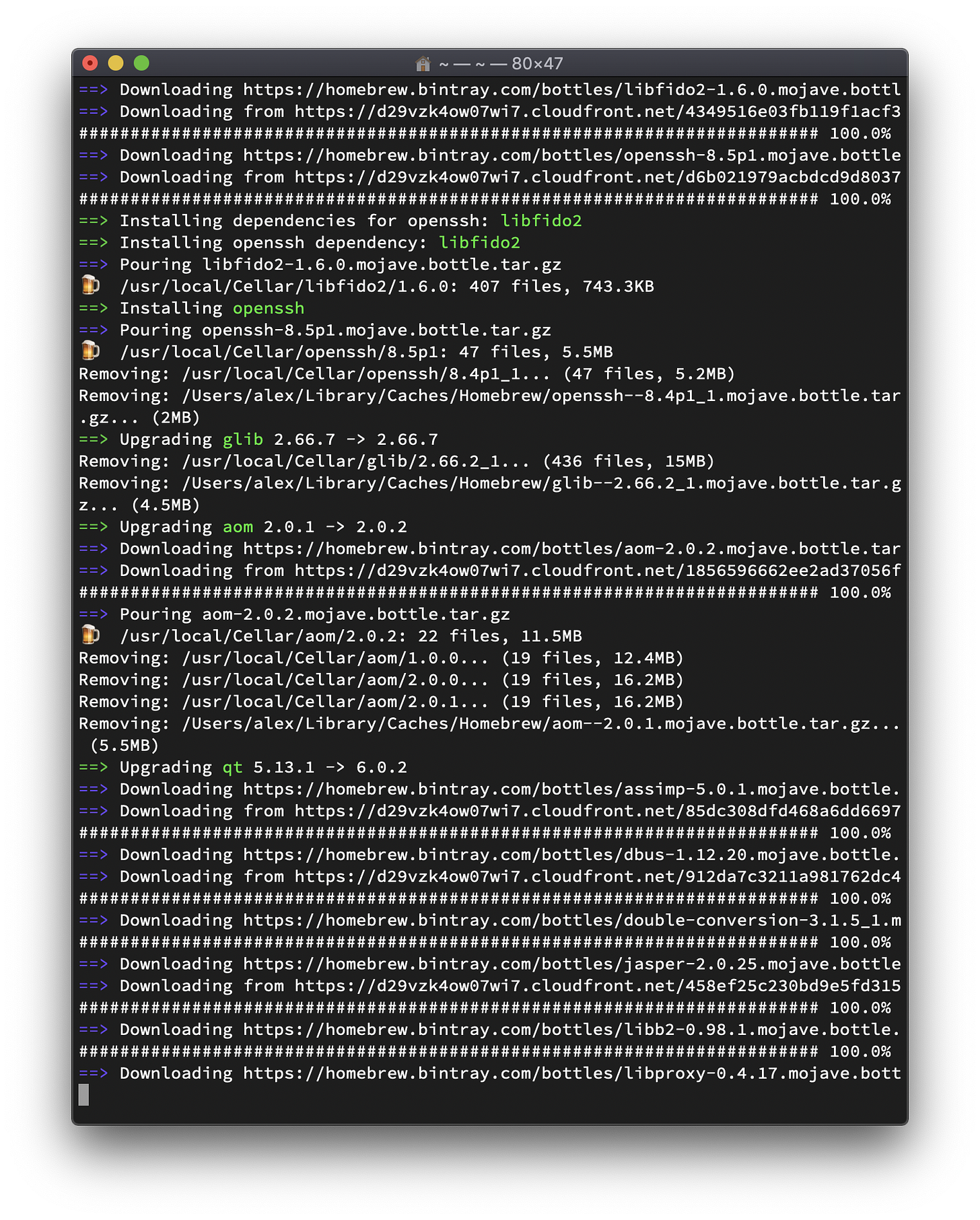

Today I ran brew upgrade on my late 2019 MacBook Pro, starting at about 21:30 local time. For the next two hours it turned my MacBook into a proverbial firecracker, making even the Touch Bar almost too hot to touch, and as of this writing at 23:09, it is still going, because for some reason Homebrew concluded that it needs to recompile native-targeting GCC 8 from source. I will most likely leave it on the charger and let it finish after I head to bed. I do not really care.

Terminal window with Homebrew plugging away. This is what is cooking my MacBook for hours on end.

How did things get like this? Well, much like the late Roman Empire, there is no one particular event one can point to, despite what public schools and pop science fiends might say. The trials and tribulations of Alaric alone hardly explain such a state of affairs. What is clear, however, is that something somewhere has gone terribly wrong.

As I outlined in the Ethos for Sustainable Computing, there are two kinds of complexities in the development of software: material complexity and immaculate complexity. In a nutshell, copious amounts of the latter are almost entirely to credit and/or blame for the qualitative decline of software as we know it today.

I am not the first to identify this problem in the wild, but rather the first to give it such terms. Many programmers years and decades before me have taken note of the phenomenon of software bloat – where software gets bigger and more unwieldy at a pace set by Moore’s Law. As that Law comes to a close, and as ever more resources are given to software for its own sake, it is time to dispose of this aphorism and delve a little deeper to get at the heart of the problem: manifest gross incompetence.

There is an overwhelming amount of incompetence in the world of software engineering and computer science. The reason for this is simple: unlike more mature fields of study, computer science has not laid a bedrock-solid foundation of fundamentals in any meaningful sense. The unrelated decline of academia has only exacerbated this, as those institutions responsible for caring about scholastic excellence and research for its own sake are out on an extended free lunch. Conversely, a great deal of my own research has revolved around exploring the fundamentals of programming. The core mechanism behind all of my work is a well-cultivated corpus of patterns and strategies, applied directly instead of through the opaque tooling of genericism.

Meanwhile, genericism has become the ruling pillar of all major efforts to effect progress in programming language theory. The result is a vast legion of developers struggling ferociously to understand such general concepts, and often failing miserably despite their valiance. There is likely a positive feedback loop at work here. In general, nobody is teaching these things. Those who know these things are few and far between, assume the relevant people either already know or can figure it out on their own, and are usually not inclined towards teaching anyone about it beyond writing documentation or promoting their works. The knowledge is on the verge of being lost.

The brew upgrade command above is one picture of what the results of all of this look like in the wild. It does all sorts of things, according to some heuristic or strategy decided once a long time ago by someone nobody knows and never revisited by anybody, and it’s good enough for now, I mean it works doesn’t it, so what’s there to really complain about, except the fact that nobody comprehensively knows what it’s doing, and that it consumes a ton of resources, I mean just buy a beefier computer right? Is my year-and-a-half-old MacBook Pro really that outdated? This is but one issue, as brew fetches all kinds of sources from Cloudflare colocated servers, which no doubt run on containers who-knows-how-many-levels deep, through who-knows-how-many transparent proxies, taking up who-knows-how-much resources, because what does it really matter, I mean it’s all billed to somebody at the end of the day, so if it’s paid for who’s complaining, except the users who have to support all that complexity with their machines, and the people paying for it if they ever found out, but that’s probably somebody else so who cares, what’s the problem?

The issue with the aphorism about software bloat is that it doesn’t come with any instructions or ethos about how to fix it. The typical real-world answer is sadly some developer plugging away writing a malloc() implementation because they wouldn’t recognise good-enough-for-now if they had to leave the house on a rainy day. It also holds developers totally blameless in creating a problem that, by now, is squarely our fault, perpetuated through hubris and impostor syndrome about our overwhelming lack of knowledge. The common wisdom is that this is all the fault of pesky, ignorant managers domineering all the things, asking for the sky on a silver platter, and not listening to the sage developers about what really matters. No, I have the courage to say about this complexity what most other people do not: it’s bad, it’s our fault and no one else’s, and it needs to be cut out.

It’s bad that Homebrew uses servers with containers. It’s bad that it has to do so much thinking to parse pre-built packages. It’s bad that it forces users to write Ruby for package definitions. It’s bad that it will fall back to building from source for unknown reasons. It’s bad that it depends on the modern World Wide Web infrastructure as we know it today, unable to meet any meaningful performance demands from a mere twenty years ago. This should be obvious to everyone, even the most tech-illiterate grandparent.

The only reason these things are bad is that all of them have an inherent cost. This is where other, more robust package managers familiar in the Linux world begin to share the blame. The only absolute in play here is that the software is complicated. Everything else is contingent on context, but complicated software takes more brainpower to work through and understand, on top of the fact that almost no one fundamentally understands anything to begin with. Bintray usage is worth it for the tiny minority of obscenely rich Silicon Valley mega-corporations who would, in aggregate, have a net benefit from it. For everyone else, it’s a total loss in time and energy. The packages could have been uploaded to FTP, or even shared as a torrent, paid for collectively. The same cost-benefit goes for almost everything else, and sadly, this problem is endemic. Projects wishing to turn this tide will have to start back at square one, which is not coincidentally what the more recent projects from Aquefir are about.

It’s time to put an end to so much immaculate complexity. Things do not need to be so hard to understand. If we can come to understand the software, truly comprehending it inside and out, we can easily optimise and improve upon it. Let’s work towards the end of bad code as we know it. No more raging against the machine.
